Installing vLLM on Ubuntu 24: A Step-by-Step Guide

What if you could serve large language models with significantly faster inference times and better resource utilization? This is the core promise of the vLLM library, a high-performance Python tool designed specifically for this demanding task.

We created this guide to walk you through the complete setup process. The installation is hardware-dependent, meaning your system’s configuration—be it an NVIDIA or AMD card, a TPU, or even a CPU-only setup—directly influences the steps you’ll take.

Understanding your hardware capabilities before you begin is crucial for optimal performance. This comprehensive guide provides tailored instructions for each scenario. Whether you need a simple pip-based method or a more advanced, customized deployment from source, we cover the essential methods to ensure a successful setup.

Our goal is to balance accessibility for newcomers with the detailed information experienced users require for production deployments. By the end, you will be equipped to leverage the full potential of this powerful library.

Key Takeaways

- vLLM is a specialized Python library for efficient LLM serving and inference.

- The installation process varies significantly based on your specific hardware (GPU, TPU, CPU).

- Proper setup is critical for achieving optimal performance and faster inference times.

- This guide covers multiple methods, from simple pip installs to building from source.

- Understanding your system’s resources is the essential first step before beginning.

- Tailored instructions ensure compatibility with various hardware configurations.

Preparing Your Ubuntu 24 Environment

Ensuring your system meets all prerequisites will prevent common installation issues and optimize performance. We begin by verifying that your computing environment has the necessary components for a successful setup.

System Requirements and Prerequisites

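Your hardware configuration determines the specific requirements. For CPU-based operation on x86 processors, vLLM's CPU backend is built around the AVX512 instruction set, so confirm that your processor supports it before you continue.

Check your processor capabilities from the terminal, for example by inspecting the flags listed in `/proc/cpuinfo` or the output of `lscpu`. Also verify available memory and storage: model weights and the KV cache must fit in RAM (or GPU memory), and downloaded models take up disk space. These specifications directly impact deployment success.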
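The checks described above can be sketched as a short shell snippet. It is a minimal sketch, assuming a Linux system that lists CPU flags in `/proc/cpuinfo`; the helper names `has_cpu_flag` and `report_hardware` are hypothetical and introduced only for illustration.

```shell
# has_cpu_flag FLAG [FILE]: succeed if FLAG is listed in the CPU flags.
# FILE defaults to /proc/cpuinfo; the optional argument makes the check testable.
has_cpu_flag() {
    grep -m1 '^flags' "${2:-/proc/cpuinfo}" 2>/dev/null | grep -qw -- "$1"
}

# report_hardware: print the facts that matter before installing.
report_hardware() {
    if has_cpu_flag avx512f; then
        echo "AVX512: available"
    else
        echo "AVX512: not found"
    fi
    nproc                                            # logical CPU cores
    grep -E 'MemTotal|MemAvailable' /proc/meminfo    # installed and free RAM
    df -h "$HOME"                                    # disk space for model files
}
```

Call `report_hardware` to print the summary. `avx512f` is the foundation flag of the AVX512 family; the same helper can test for extensions, for example `has_cpu_flag avx512_bf16`.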

Updating and Upgrading the OS

Begin by refreshing your package lists with `sudo apt update`, then bring installed packages up to date with `sudo apt upgrade`. This foundational step creates a stable base for subsequent operations.

Proper Python 3 configuration is essential since vLLM is a Python library. Ubuntu 24.04 ships Python 3.12, so check that the vLLM release you plan to install supports that interpreter version. Work inside a virtual environment: Ubuntu 24.04 marks the system Python as externally managed, and pip refuses to install packages into it by default. For source builds, the compiler should be gcc/g++ version 12.3.0 or higher.

Setting up environment variables and dependencies completes the preparation phase. This comprehensive approach ensures your system environment is fully ready.
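The preparation steps above might look like the following. This is a sketch, assuming the standard Ubuntu package names `python3-venv`, `python3-pip`, and `build-essential`; the function name `prepare_system` is hypothetical, and the commands are wrapped in a function so that nothing runs until you call it.

```shell
# prepare_system: refresh package lists, upgrade installed packages, install
# Python tooling and a compiler, then report the versions later steps will use.
prepare_system() {
    sudo apt-get update || return 1
    sudo apt-get upgrade -y || return 1
    sudo apt-get install -y python3 python3-venv python3-pip build-essential || return 1
    python3 --version || return 1
    gcc --version | head -n 1
}
```

Run `prepare_system` once on a fresh machine. The last two lines only print version information, which makes it easy to compare against the requirements listed above.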

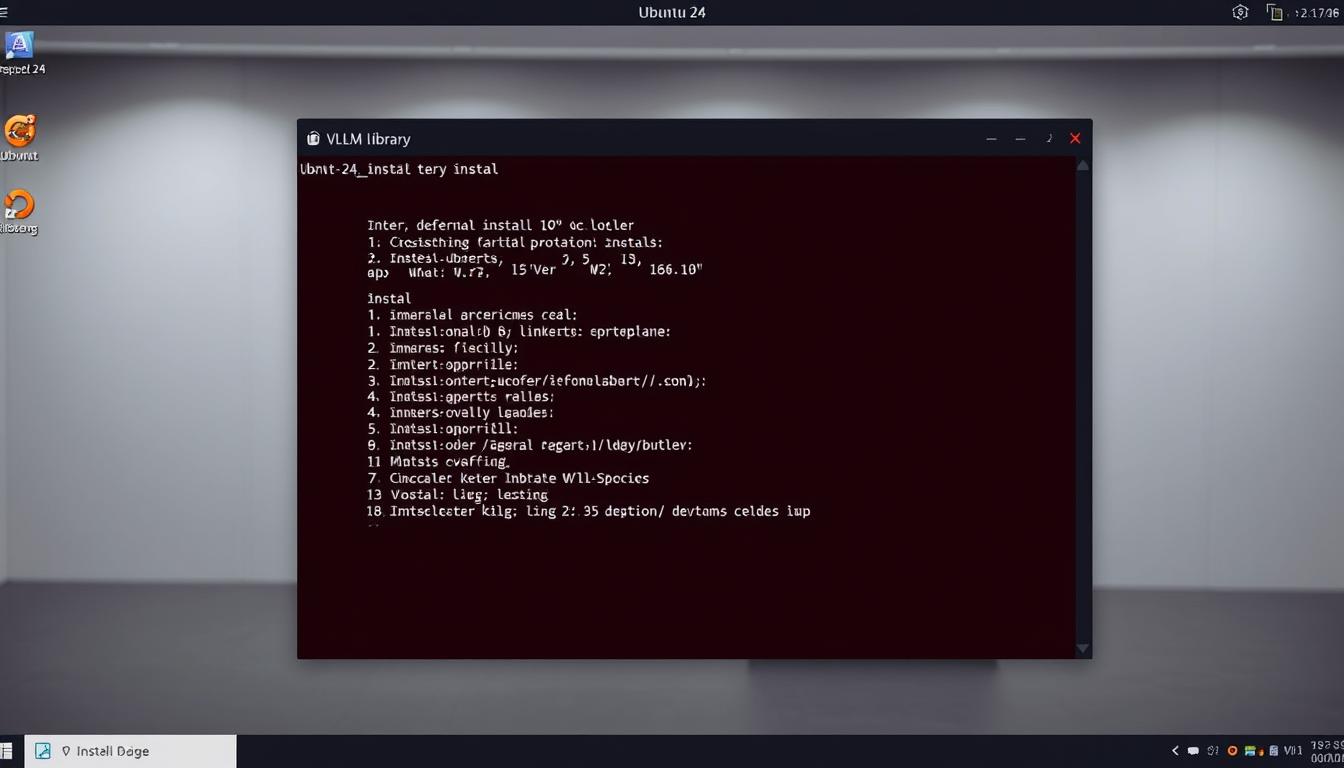

Install vLLM on Ubuntu 24

The most direct path to get the library running involves using Python’s pip package manager. This method represents the quickest way for most standard configurations.

We begin by ensuring pip is current. Upgrade it with `python3 -m pip install --upgrade pip` to avoid compatibility issues caused by outdated tooling.

Next, install the essential foundation packages. These include wheel, packaging, ninja, and a specific version of setuptools. NumPy is also a fundamental dependency required beforehand.

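The final command for the library itself is hardware-dependent. The exact syntax varies for NVIDIA GPUs, AMD hardware, or CPU-only setups. For NVIDIA GPUs, `pip install vllm` pulls the default CUDA build from PyPI. For CPU deployments, PyTorch has to come from its CPU wheel index, which you select with pip's `--extra-index-url https://download.pytorch.org/whl/cpu` option.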

After the process completes, verification is crucial. Open a Python environment and attempt to import the module. This confirms all components are recognized correctly.
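Put together, the pip route described above could look like this. It is a sketch under stated assumptions: the virtual environment path `~/vllm-env` and the function name `install_vllm_pip` are hypothetical, the `setuptools>=49.4.0` constraint is assumed from vLLM's build notes rather than stated in this guide, and the plain `pip install vllm` line targets NVIDIA hardware.

```shell
# install_vllm_pip [VENV_DIR]: create a virtual environment, install vLLM
# into it, and confirm that the module imports.
install_vllm_pip() {
    venv_dir="${1:-$HOME/vllm-env}"
    python3 -m venv "$venv_dir" || return 1
    # Activate the environment so that "python" below is the venv interpreter.
    . "$venv_dir/bin/activate" || return 1
    python -m pip install --upgrade pip || return 1
    python -m pip install wheel packaging ninja "setuptools>=49.4.0" numpy || return 1
    # Default wheel from PyPI (CUDA build for NVIDIA GPUs).
    python -m pip install vllm || return 1
    # Verification: the import must succeed and print a version string.
    python -c 'import vllm; print("vLLM", vllm.__version__)'
}
```

Calling `install_vllm_pip` with no argument uses the default path; pass another directory to keep several environments side by side. Because the function activates the environment in the current shell, later commands in the same session also use it.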

Should you encounter problems like network timeouts or permission errors, start with the basics: check your internet connection, and install into a virtual environment rather than the system Python. These two steps resolve most common hurdles.

Using Docker for Quick Installation

For those seeking a clean, reproducible setup, container-based deployment is an excellent choice. Docker provides an isolated platform that encapsulates all dependencies, eliminating conflicts with your host system.

Building with the Dockerfile

We begin by constructing the container image from the official source. Run the build from the root of a cloned vLLM repository. The build command selects the CPU-specific Dockerfile (`Dockerfile.cpu`) and allocates shared memory.

Execute this command in your terminal:

    docker build -f Dockerfile.cpu -t vllm-cpu-env --shm-size=4g .

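The `--shm-size=4g` parameter sets the size of shared memory (`/dev/shm`) available to the build. Shared memory matters for model loading and inter-process communication, so if the server needs more of it during inference, pass the same flag to `docker run` as well.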

Running the Docker Container

Once the image builds successfully, you can run the container with optimized settings. The command includes several important flags for performance and cleanup.

Use this basic example:

    docker run -it --rm --network=host vllm-cpu-env

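The `--rm` flag removes the container automatically when it exits, and `--network=host` shares the host's network stack so the server's port is reachable directly. For advanced CPU optimization on multi-socket systems, you can pin the container to specific cores and memory nodes with Docker's optional `--cpuset-cpus` and `--cpuset-mems` parameters.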
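A run command with core affinity might be sketched as follows. The function name `run_vllm_cpu_container` and the default values (cores 0-31, NUMA node 0) are hypothetical; inspect `lscpu` to find the layout of your own machine.

```shell
# run_vllm_cpu_container [CPUS] [MEM_NODES]
# Pins the container to a set of cores and to the memory of given NUMA nodes.
run_vllm_cpu_container() {
    docker run -it --rm --network=host \
        --cpuset-cpus="${1:-0-31}" \
        --cpuset-mems="${2:-0}" \
        vllm-cpu-env
}
```

For example, `run_vllm_cpu_container 32-63 1` would keep the container on the second socket of a two-socket machine with 32 cores per socket, assuming that is how the cores are numbered.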

This containerized approach simplifies dependency management, making it easier to deploy consistent environments across different systems.

Building vLLM from Source

Compiling directly from the source code offers the highest degree of customization and performance optimization. This method is ideal when you need to tailor the library for a specific CPU architecture or enable advanced features not available in pre-built packages.

Setting Up GCC, Python, and Other Dependencies

We begin by ensuring the system has the correct build tools. A modern compiler is essential. We recommend using gcc/g++ version 12.3.0 or newer.

After updating your package lists, you can install the compiler packages. The next step involves registering them as the default system compiler, which on Ubuntu is done with `update-alternatives`. This ensures the build process uses the correct version.

With the compiler ready, we focus on the Python environment. First, upgrade pip to the latest version. Then, install essential build tools like wheel, packaging, and ninja.

A specific version of setuptools and NumPy are also required. These foundational packages prepare your system for the next phase of the install process.
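The toolchain setup above can be sketched as two small functions. The function names are hypothetical; the `libnuma-dev` package and the `setuptools>=49.4.0` constraint are assumptions based on vLLM's CPU build notes and are not stated in this guide.

```shell
# install_build_toolchain: install gcc/g++ 12 and make them the default compiler.
install_build_toolchain() {
    sudo apt-get update -y || return 1
    sudo apt-get install -y gcc-12 g++-12 libnuma-dev || return 1
    # Register gcc-12 as the default gcc; g++-12 follows it as a slave link.
    sudo update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-12 10 \
        --slave /usr/bin/g++ g++ /usr/bin/g++-12 || return 1
    gcc --version | head -n 1
}

# install_python_build_deps: upgrade pip and install the Python build tools.
install_python_build_deps() {
    python3 -m pip install --upgrade pip || return 1
    python3 -m pip install wheel packaging ninja "setuptools>=49.4.0" numpy
}
```

Run `install_build_toolchain` first, then `install_python_build_deps` inside your virtual environment. If the distribution's default gcc already meets the version requirement, the `update-alternatives` step is optional.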

Compiling and Installing the CPU Backend

The final step is to build the library specifically for CPU operations. This requires installing CPU-specific dependencies from a requirements file (`requirements-cpu.txt` in the repository). We use a special index URL, `https://download.pytorch.org/whl/cpu`, to fetch the CPU builds of the PyTorch packages.

The actual build command is straightforward but crucial. You must set the environment variable `VLLM_TARGET_DEVICE=cpu` before running the setup script (`setup.py`). This compiles the backend with the correct optimizations.

An important note is that the CPU backend uses BF16 (Brain Floating Point 16) as its default data type. This provides a good balance of performance and precision on modern processors.

This approach gives you full control, which is beneficial for managing complex software environments. The build script also checks the host CPU flags and enables the AVX512_BF16 extension when it is present, or you can force-enable it by setting `VLLM_CPU_AVX512BF16=1` before the build.
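The build steps above could be scripted roughly like this. It is a sketch, assuming a vLLM release that keeps `requirements-cpu.txt` and `setup.py` in the repository root (newer releases may use different paths); the function name `build_vllm_cpu` and the default checkout directory `vllm` are hypothetical.

```shell
# build_vllm_cpu [SRC_DIR]: build and install vLLM for the CPU backend.
# The body runs in a subshell, so the caller's working directory is unchanged.
build_vllm_cpu() (
    src_dir="${1:-vllm}"
    if [ ! -d "$src_dir" ]; then
        git clone https://github.com/vllm-project/vllm.git "$src_dir" || exit 1
    fi
    cd "$src_dir" || exit 1
    # CPU-only PyTorch wheels come from PyTorch's dedicated index.
    python3 -m pip install -v -r requirements-cpu.txt \
        --extra-index-url https://download.pytorch.org/whl/cpu || exit 1
    # Optional: export VLLM_CPU_AVX512BF16=1 beforehand to force AVX512_BF16.
    VLLM_TARGET_DEVICE=cpu python3 setup.py install
)
```

Run `build_vllm_cpu` from the directory that should contain the checkout, ideally with your virtual environment already activated so the build installs into it.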

Implementing Performance Enhancements

Achieving maximum inference speed and stability requires fine-tuning specific system and library settings. We focus on optimizations that extract the best possible performance from your hardware, particularly for CPU-based deployments.

Leveraging AVX512, BF16, and IPEX

Modern processors benefit greatly from specialized instruction sets. The library's CPU backend uses BF16 by default for an optimal balance of precision and speed.

For an extra performance boost on Intel architectures, we recommend the Intel Extension for PyTorch (IPEX). Versions 2.3.0 and later integrate automatically, providing advanced vectorization and memory management.
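To confirm that a suitable IPEX version is present, a check along these lines may help. It is a sketch, assuming IPEX is importable as `intel_extension_for_pytorch` and that GNU `sort -V` is available; the helper names `version_ge`, `ipex_version`, and `check_ipex` are hypothetical.

```shell
# version_ge A B: succeed if version A is greater than or equal to version B.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# ipex_version: print the installed IPEX version, or fail if it cannot be imported.
ipex_version() {
    python3 -c 'import intel_extension_for_pytorch as ipex; print(ipex.__version__)' 2>/dev/null
}

# check_ipex: report whether IPEX is present and at least version 2.3.0.
check_ipex() {
    ver=$(ipex_version) || { echo "IPEX: not installed"; return 1; }
    ver=${ver%%+*}    # drop a local suffix such as "+cpu"
    if version_ge "$ver" 2.3.0; then
        echo "IPEX $ver: OK"
    else
        echo "IPEX $ver: older than 2.3.0"
        return 1
    fi
}
```

Running `check_ipex` inside the environment that hosts vLLM prints one status line and returns a non-zero exit code when IPEX is missing or too old, which makes it usable in setup scripts.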

Optimizing Memory with TCMalloc and Environment Variables

Efficient memory allocation is critical. We strongly advise installing TCMalloc for high-throughput scenarios. After installation, you configure it by setting the `LD_PRELOAD` environment variable.

Another key setting is `VLLM_CPU_KVCACHE_SPACE`. This environment variable controls the cache size for parallel requests. A larger value, like 40 for 40 GB, allows more concurrent processing but needs sufficient system RAM.

For the best results, isolate CPU cores for compute threads and consider disabling hyper-threading on bare-metal servers. On multi-socket systems, use tools like `numactl` to bind processes to local memory nodes.
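The memory and affinity settings above can be combined into one launch command, sketched below. The assumptions are these: the TCMalloc library is installed somewhere under `/usr/lib` (for instance from the `libtcmalloc-minimal4` package, which apt may resolve to a differently suffixed name on Ubuntu 24), the NUMA node number 0 is only an example, and the function names are hypothetical.

```shell
# tcmalloc_path: print the first TCMalloc shared library found under /usr/lib.
tcmalloc_path() {
    find /usr/lib -name 'libtcmalloc_minimal.so*' 2>/dev/null | sort | head -n 1
}

# serve_cpu_tuned MODEL: launch the server with TCMalloc preloaded, a 40 GB
# KV cache, and CPU and memory bound to NUMA node 0.
serve_cpu_tuned() {
    lib=$(tcmalloc_path)
    [ -n "$lib" ] || { echo "TCMalloc library not found" >&2; return 1; }
    env LD_PRELOAD="$lib${LD_PRELOAD:+:$LD_PRELOAD}" \
        VLLM_CPU_KVCACHE_SPACE=40 \
        numactl --cpunodebind=0 --membind=0 \
        vllm serve "$1"
}
```

Using `env` keeps the variables scoped to the server process instead of exporting them into the whole shell session. Lower `VLLM_CPU_KVCACHE_SPACE` if the machine has less free RAM than the 40 GB used here.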

Advanced Configuration and Inference Options

Configuring the inference server properly transforms a basic installation into a high-performance deployment ready for real-world applications. We focus on optimizing server settings and environment variables to maximize throughput and efficiency.

Configuring the vLLM Serve Command and Model Options

The serve command launches an OpenAI-compatible API server with extensive customization options. Key parameters include `--model` for specifying the model path and `--task` for defining inference types like generation or embedding.

Data type selection through `--dtype` affects memory usage and precision. Performance settings like `--max-num-seqs` control concurrent request handling. The `--device` parameter explicitly targets specific hardware platforms.
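A launch and a test request might be sketched as follows. The model name `facebook/opt-125m`, the port, the value 64 for `--max-num-seqs`, and the function names are placeholders chosen for illustration; flags such as `--task` and `--device` vary between vLLM releases, so this sketch sticks to `--dtype`, `--max-num-seqs`, `--host`, and `--port`.

```shell
# serve_model [MODEL]: start the OpenAI-compatible server on localhost:8000.
serve_model() {
    vllm serve "${1:-facebook/opt-125m}" \
        --dtype bfloat16 \
        --max-num-seqs 64 \
        --host 127.0.0.1 \
        --port 8000
}

# query_model MODEL PROMPT: send one completion request to the running server.
# The JSON is built naively, so PROMPT must not contain double quotes.
query_model() {
    curl -s http://127.0.0.1:8000/v1/completions \
        -H 'Content-Type: application/json' \
        -d "{\"model\": \"$1\", \"prompt\": \"$2\", \"max_tokens\": 32}"
}
```

Start the server with `serve_model` in one terminal, wait until it reports that it is listening, then run `query_model facebook/opt-125m "Hello"` in another. The response is a JSON document in the OpenAI completions format.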

Customizing Environment Variables for Better Performance

Environment variables provide fine-grained control over server behavior and resource allocation. They work alongside command-line arguments to optimize memory management and throughput.

Proper configuration balances latency and resource utilization across different workload patterns. This approach ensures stable operation under varying request volumes.

For complex setups involving specialized hardware, our advanced configuration guide provides detailed examples. These demonstrate optimal parameter combinations for specific deployment scenarios.

Conclusion

With the installation phase successfully behind you, the focus shifts to leveraging your optimized environment for maximum productivity. You now have a powerful platform ready to serve large language models efficiently.

The performance enhancements we covered—from AVX512 instructions to BF16 data types—ensure your system extracts maximum capability from available hardware. Proper memory management through environment variables and TCMalloc maintains stability under heavy loads.

The advanced configuration options provide granular control over model behavior and output characteristics. This flexibility allows you to fine-tune inference for specific use cases and workload patterns.

Remember that optimization is an iterative process. The examples we provided serve as starting points that should be adjusted based on your actual requirements and hardware capabilities.

We encourage continued exploration of the library’s documentation as the project evolves with regular updates. You’re now well-equipped to build applications that leverage these powerful inference capabilities for development, research, or production deployments.

FAQ

What is the primary advantage of using vLLM for LLM inference?

Can I install and run vLLM on a system without a dedicated GPU?

What is the simplest method to get vLLM running quickly for testing?

How do environment variables like `VLLM_CPU_ISA` improve performance?

Why is TCMalloc recommended for memory optimization?

What should I do if I encounter issues during the pip installation process?

About the Author

Mark is a senior content editor at Text-Center.com and has more than 20 years of experience with Linux and Windows operating systems. He also writes for Biteno.com.